I've said it before and I'll say it again: software development is in a terrible place. But you know what's even more terrifying? The state of security in AI. In our brave new world of Large Language Models, we've handed over the keys to the kingdom. We've built tools that give our AIs access to everything, assuming they'll be good little bots and only use the information they need. And then we get stories like the one about Copilot Studio, where an "AIjacking" exploit led to a full data leak. All the company's knowledge, leaked. Why? Because the tools weren't built with authorization in mind.

A lot of the tools and MCPs out there are "vibe-coded," built by someone to scratch a personal itch. They work great for a single user, a side project, or a small consumer app. But when you try to bring them into the enterprise, everything goes boom. Suddenly, a simple tool that needs to access a single file on a server becomes a whole production. You have to write a custom server for every little task, manage a dozen different secrets, and build a whole permission system just so one team can't read another team's data.

The promise was simple, right? A server that just does one thing and does it well. But what if that one thing needs to access different data for different users or different teams? Suddenly, the "simple" server isn't so simple. We were about to build a whole new service just to handle group-based file access for our MCPs, and honestly, the thought of it gave me a headache. All that boilerplate, all those tests, all that... sigh.

Solving a Problem We Didn't Ask For

Luckily, we already have the Enterprise MCP Bridge. Now, don't get me wrong, this isn't some magical solution that will fix all your problems. It's not a silver bullet, and it's certainly not a free lunch. But it does get us a little bit closer to a saner world. So we just released a new feature: you can take a single MCP server that was set up for easy single-user deployments and serve multiple users and groups with it, without having to write a line of extra code.

Take the server-memory MCP, for instance. Ever wondered how to safely deploy something that starts with MEMORY_FILE_PATH="/data/memory.json" npx -y @modelcontextprotocol/server-memory? Because once you have deployed that, everyone has access to the same memory. So, what to do next? Deploy a separate instance for every single user or group? The finance team would get their own server, the marketing team would get theirs, and so on. It's a deployment and update nightmare, a resource hog, and a classic example of solving a simple problem with a sledgehammer.

With the new Group-Based Data Access, we've solved this by adding a bit of magic to the MCP_SERVER_COMMAND and MCP_ENV_ variables. Now, you can use placeholders like {data_path}. The bridge, being the intelligent beast that it is, looks at the user's OAuth token, figures out what group they belong to, and dynamically swaps that placeholder for the correct file path. So export MCP_ENV_MEMORY_FILE_PATH=/data/{data_path}.json; npx @modelcontextprotocol/server-memory magically changes the path to /data/g/finance.json for a finance user. You want your own private memory? It will change the path to /data/u/<your user>.json right away! All you need is a huge drive that you can connect it to.
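To make the substitution less magical, here is a minimal Python sketch of the idea. It is not the bridge's actual code: the function name render_child_env is hypothetical, and stripping the MCP_ENV_ prefix before handing variables to the MCP server is an assumption based on the example above.

```python
from typing import Dict, Mapping

PLACEHOLDER = "{data_path}"
ENV_PREFIX = "MCP_ENV_"  # assumption: the prefix is stripped for the child process


def render_child_env(bridge_env: Mapping[str, str], data_path: str) -> Dict[str, str]:
    """Build the environment for the MCP server process.

    Only variables starting with MCP_ENV_ are forwarded, and every
    {data_path} placeholder is replaced with the resolved path segment
    (for example "g/finance" or "u/alice").
    """
    child_env: Dict[str, str] = {}
    for key, value in bridge_env.items():
        if key.startswith(ENV_PREFIX):
            child_env[key[len(ENV_PREFIX):]] = value.replace(PLACEHOLDER, data_path)
    return child_env


if __name__ == "__main__":
    env = {"MCP_ENV_MEMORY_FILE_PATH": "/data/{data_path}.json", "HOME": "/root"}
    print(render_child_env(env, "g/finance"))
```

With "g/finance" as the resolved segment, the memory server would be started with MEMORY_FILE_PATH pointing at the finance group's file, and with "u/alice" at Alice's private one.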

And the same goes for other MCPs as well! Thinking about using the database MCP? Run node dist/src/index.js --sqlserver --database {data_path} and you can, without leaking your database to the entire company!

No more spinning up a thousand tiny servers. No more complex, custom routing. No more begging the DevOps team for new environments. It just... works.

A Modest Proposal

I know what you're thinking. This sounds too simple. What about security? What about unauthorized access? What if someone tries to be clever and requests a file they're not supposed to? Well, the bridge handles all that for you. It's not like your users can just type in any path they want. The system validates group membership against the user's JWT. If you're not in the "finance" group, you simply can't access the "finance" data. It's beautiful in its simplicity.
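The check described above can be sketched in a few lines of Python. Treat this as an illustration under stated assumptions, not the bridge's implementation: the function resolve_data_path is hypothetical, the claim names "sub" and "groups" are assumptions (identity providers differ), and the token's signature is assumed to have been verified already. The g/ and u/ prefixes follow the paths mentioned earlier.

```python
import re
from typing import Any, Mapping, Optional

# Only plain names are allowed, so "../" tricks never reach the file system.
_SAFE_NAME = re.compile(r"[A-Za-z0-9_-]+")


def resolve_data_path(claims: Mapping[str, Any], requested_group: Optional[str] = None) -> str:
    """Return "g/<group>" for a group the user belongs to, else "u/<user>".

    `claims` is the already-verified JWT payload. The claim names used
    here ("sub", "groups") are assumptions for this sketch.
    """
    if requested_group is None:
        kind, name = "u", str(claims["sub"])
    else:
        if requested_group not in claims.get("groups", []):
            raise PermissionError(f"user is not a member of group {requested_group!r}")
        kind, name = "g", requested_group
    if not _SAFE_NAME.fullmatch(name):
        raise ValueError(f"unsafe name in token or request: {name!r}")
    return f"{kind}/{name}"
```

The important design choice is that the path is derived from the token, never taken from user input: the only thing a user can influence is which of their own groups is selected.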

This isn't a new paradigm, or some grand architectural shift. It's a small, sane step forward in a world that seems determined to make things unnecessarily complicated. It's about bringing back a bit of common sense to MCP hosting.

This won’t solve all of your problems. Storing data coming in from potentially multiple distributed requests in a file, for instance, might lead to lovely race conditions and broken files. But don’t worry, we already solved that part in our platform, which we will release at a later stage.
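For a feel of what the "broken files" half of that problem looks like in code, here is a generic Python sketch of one common mitigation: write to a temporary file and rename it into place. This is a swapped-in, well-known technique, not the solution from our platform, and the helper name atomic_write_json is hypothetical. It keeps readers from seeing half-written files, but it does not stop two writers from overwriting each other's updates.

```python
import json
import os
import tempfile


def atomic_write_json(path: str, payload: dict) -> None:
    """Write JSON so that readers see either the old or the new file, never a torn one."""
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must live on the same file system for the rename to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            json.dump(payload, handle)
            handle.flush()
            os.fsync(handle.fileno())  # push the bytes to disk before renaming
        os.replace(tmp_path, path)  # replaces the target in one step
    except BaseException:
        # Clean up the temp file if anything failed before the rename.
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
```

Lost updates between concurrent writers still need locking or a real data store, which is exactly the part a file-per-group setup does not give you for free.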

Let’s remember how code made working with information easier in the first place. We don’t need to throw the baby out with the bathwater just because we have LLMs now. The future of AI is bright, but it’s even brighter (and more reliable!) with some solid old-school software engineering.

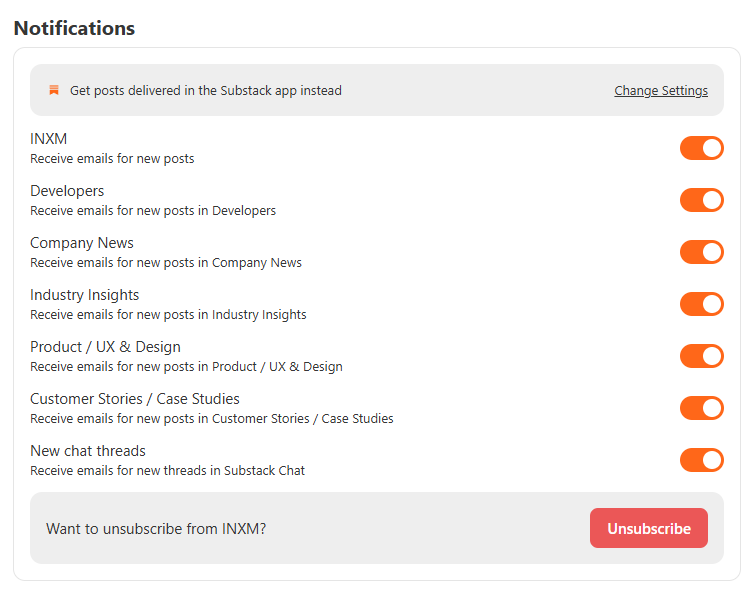
