Alright, team, strap in. We've released a new version of the Enterprise MCP Wrapper, and it's a big one. For those of you who've been with us on this journey, you'll know we've always been about providing a solid, open-source component for our enterprise customers. We're giving you the tools to bridge the gap between your applications and the power of MCPs. But this time, we've gone a step further. We've taken the first serious swing at making this thing a plug-and-play solution for creating agents.

We've all seen the agent buzz. Everyone and their dog has an "agent" that does something, somewhere. A lot of the time, that "agent" is basically just a glorified prompt. Our goal with this release is to augment your MCP deployment so that its tool knowledge is available to, and discoverable by, agentic or traditional orchestrations via a conversational interface. We looked at what others are doing, and to be honest, we struggled to incorporate their approaches into our platform. There's a whole lot of theory, nice ideas, and exciting PoCs, but when you're in the trenches, you need something that just works. And that's what we've been working on.

The numbers back that up: roughly 8,000 additions for three new endpoints! And while the sheer amount of code may seem like complexity for complexity's sake, it is functionality that has been tested and proven to work with tool-enabled models, from small 8B models to the big ones. It comes with plenty of guardrails, tracing, and logging, so that you never miss a failure and never end up lost at 3 a.m. trying to figure out why THIS GODDAMN THING stopped working.

It's All About Being a Good Host: The New Endpoints

The core of this release is all about making the Enterprise MCP Bridge a good host for your agents. We've introduced three new endpoints to make this happen:

GET /.well-known/agent.json: This dynamically generated file is the agent's business card. It's A2A-compliant and contains all the metadata about your service, its skills, and the tools it can use. It's the first step to making your agent discoverable and easy to integrate with other A2A-compliant systems. Think of it as your agent's LinkedIn profile.

POST /tgi/v1/a2a: An A2A-compliant endpoint for JSON-RPC requests, ready for interoperability with other services.

POST /tgi/v1/chat/completions: This is the big one for your chat. An OpenAI-compatible endpoint that allows for automatic agentic workflow execution. It's the "it just works" button for your agents. You send a chat completion request, and our component handles the tool integration and execution for you. It supports both streaming and non-streaming modes, because who doesn't love watching text appear in real time?
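To make the discovery side a little more concrete, here is a minimal Python sketch of how a client might read the agent card and prepare a JSON-RPC call for the A2A endpoint. Treat it as an illustration under assumptions, not as the bridge's documented client: the helper names are made up for this example, the `skills` array with a `name` per entry is what we'd expect from an A2A agent card, and the JSON-RPC method name and params are left to the caller because they depend on the A2A spec revision your deployment targets.

```python
import json
import urllib.request


def fetch_agent_card(base_url: str) -> dict:
    """GET the agent card from the well-known path named in this post."""
    url = base_url.rstrip("/") + "/.well-known/agent.json"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def skill_names(card: dict) -> list:
    """List skill names. Assumes the card carries a `skills` array of objects."""
    return [skill.get("name", "<unnamed>") for skill in card.get("skills", [])]


def build_jsonrpc_request(method: str, params: dict, request_id: int = 1) -> dict:
    """Wrap a call in a JSON-RPC 2.0 envelope, ready to POST to /tgi/v1/a2a.

    The method name and the shape of `params` are assumptions to check
    against the A2A spec revision you use.
    """
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
```

In practice you would call `fetch_agent_card` with the base URL of your own deployment, inspect `skill_names(card)` to see what the agent advertises, and then serialize the result of `build_jsonrpc_request(...)` with `json.dumps` as the POST body.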

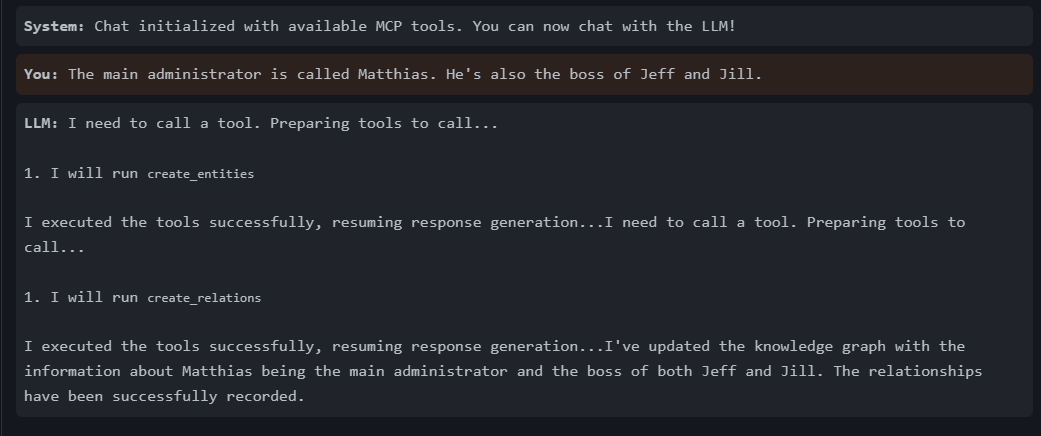

The idea here is to abstract away the complexity. Rather than handling the tool calling yourself, you let the agent do it: it is aware of the MCP context it operates in, it is primed with a well-defined prompt, and it handles the dirty work behind the scenes. It's agents made easy, traceable, and Kubernetes-ready. If you want to try it out, run ./start.sh in either the memory-group-access or token-exchange-m365 example project, and see it in action in the chat.
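From the caller's side, that means a plain OpenAI-style chat request with no tool plumbing in it. The Python sketch below shows what such a request could look like. The base URL, the placeholder model name, and the helper names are assumptions for illustration, and the response parsing assumes the usual non-streaming OpenAI shape (`choices[0].message.content`); your deployment may also require authentication headers that are not shown here.

```python
import json
import urllib.request

BRIDGE_URL = "http://localhost:8000"  # hypothetical address of your deployment


def build_chat_request(base_url, user_message, model="placeholder-model", stream=False):
    """Build an OpenAI-style chat completion request for the bridge endpoint."""
    payload = {
        "model": model,  # placeholder; use whatever your setup expects
        "messages": [{"role": "user", "content": user_message}],
        "stream": stream,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/tgi/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(request):
    """Send a non-streaming request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Note that the request carries no `tools` array and no tool-result messages. If the endpoint behaves as described above, tool discovery and execution happen on the server, and the caller only sees the final answer.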

Trust, but Verify: Observability and Auditing

Of course, with great power comes great responsibility. The ability to run complex, multi-step agentic workflows in the background is awesome, but it can also be a little opaque. We believe that transparency and auditability are non-negotiable for enterprise software.

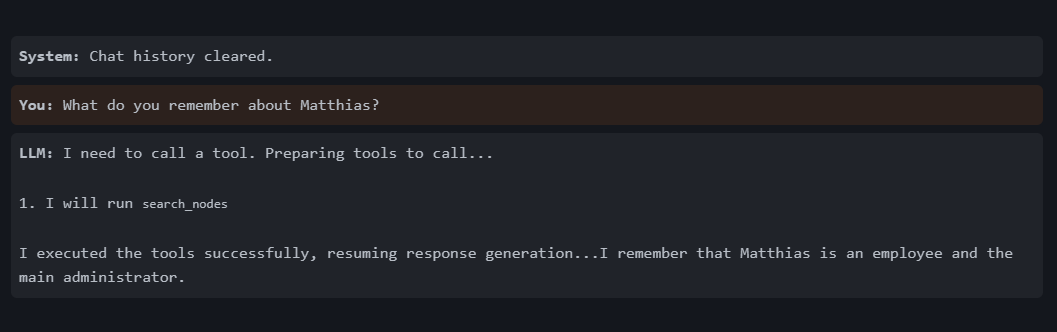

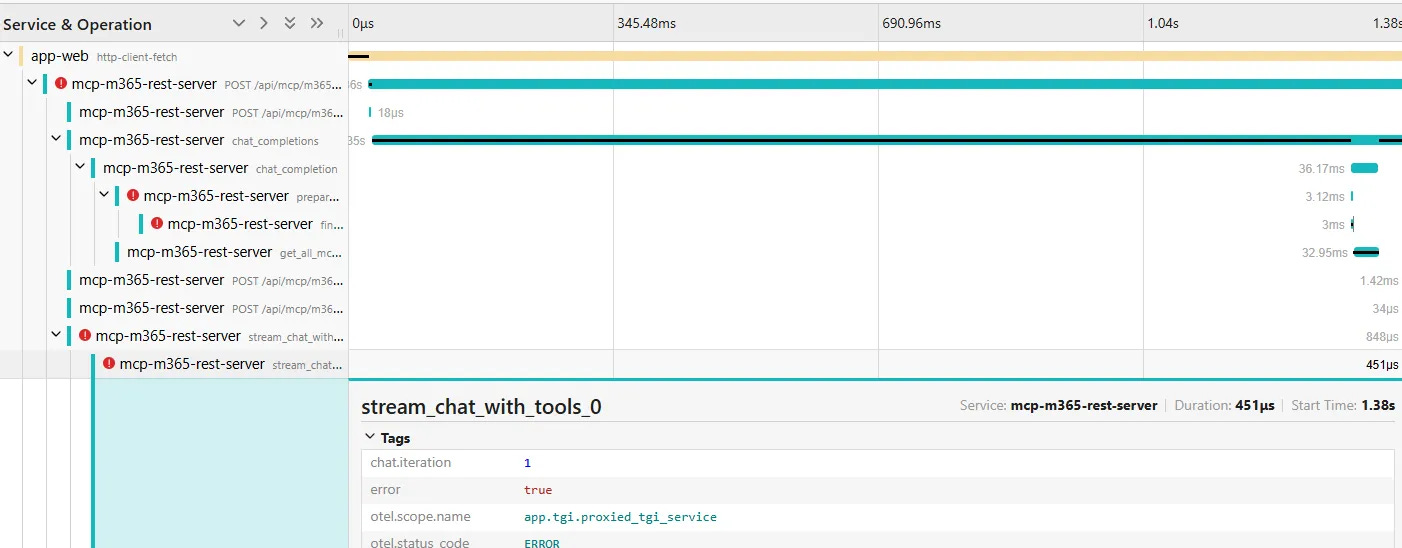

We've worked hard to ensure that every action, every decision, and every error an agent makes is traceable. Using tools like Jaeger, you can visualize the entire execution flow, see exactly which tools were called, and identify where things went wrong.

This level of observability is critical. It's one thing to have a black box that spits out a result, but it's another thing entirely to know exactly why it did what it did. This allows you to debug failing workflows, optimize agent performance, and, most importantly, have a clear audit trail for compliance and security. No more guessing games. The token-exchange-m365 example comes with a Jaeger deployment, so if you want to see it for yourself, check it out!

Let's Get Started: How to Use It

Ready to give it a go? The new agent features are enabled with a few simple environment variables:

TGI_API_URL: Point this to your OpenAI-compatible endpoint.

TGI_API_TOKEN: Your token, if needed.

TGI_MODEL_NAME: The model you want to use.
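If you like to fail fast, a small pre-flight check for the three variables above can save you a confusing first run. The Python sketch below is only an illustration: `read_agent_config` is a hypothetical helper, not part of the bridge, and treating TGI_API_URL and TGI_MODEL_NAME as mandatory is our reading of "if needed" on the token, not a documented rule.

```python
import os

# Assumption: URL and model name are required, the token is optional.
REQUIRED = ("TGI_API_URL", "TGI_MODEL_NAME")


def read_agent_config(env=None):
    """Collect the agent settings from the environment, or raise if incomplete."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise ValueError("missing environment variables: " + ", ".join(missing))
    config = {name: env[name] for name in REQUIRED}
    # An empty or unset token is normalized to None.
    config["TGI_API_TOKEN"] = env.get("TGI_API_TOKEN") or None
    return config
```

Calling `read_agent_config()` before launching reads from the real environment; passing a plain dict instead makes the check easy to unit-test.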

Once those are set, you're good to go. The /tgi/v1/chat/completions and /tgi/v1/a2a endpoints will automatically take care of the tool invocation for you.

And for those of you who are perfectionists (like me), we've also added a PromptService to manage system prompts and templates. This allows you to define a specific role for your agent and ensure it always starts with the right context. And, provided the MCP has prompts already available, you can select them as well! Why is this so important? Because a good prompt can make or break your agent. Without the right initial instructions, your agent might not know which tools to use or what its ultimate goal is. By providing a clear system prompt, you set the stage and give the agent the guidance it needs to be effective.

This is a big step, and it's a testament to the hard work of the team. We've taken a complex, reusable solution and made it as simple as possible. It's still a work in progress, with quite a few rough edges, but we believe in shipping fast and iterating.

And on that note, if you see a bug, please don't yell at us. Create an issue on GitHub instead, and we'll ask our brand new agent to fix it! But seriously, this is just the beginning. Let's build it together!